How NLP is bridging the gap between technology and human comprehension.

We recently caught up with Jake Pedley, the brains behind our RulesLab NLP application, to discuss the importance of natural language processing (NLP), and why we’ve chosen to harness it in our product suite.

Jake, tell us a bit about what natural language processing is, and how it fits into the whole AI picture.

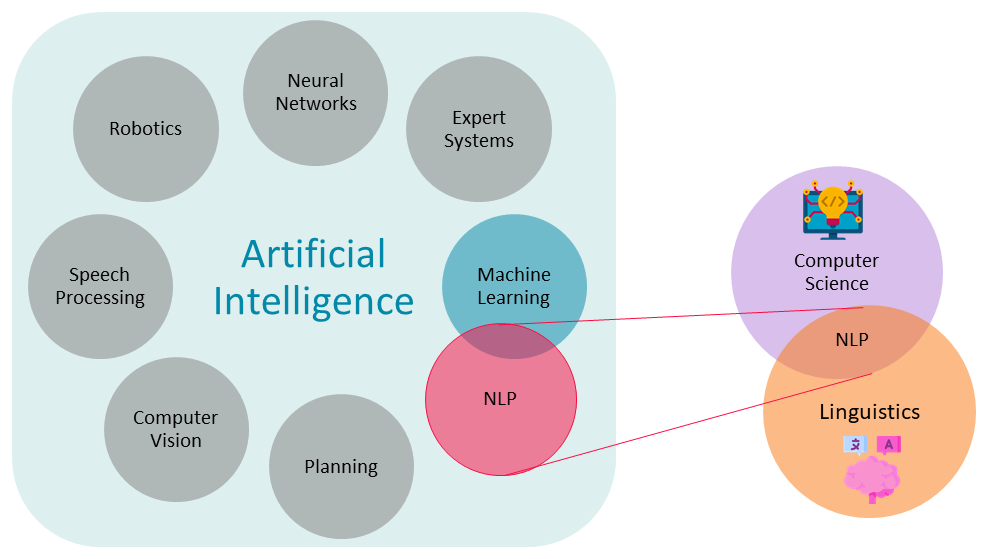

“So, as Nickie touched on in our previous blog post, AI is a field of scientific research intent on creating machines that can perform tasks that were previously only the domain of humans. Admittedly, this definition is incredibly broad, and the boundary of what counts as AI keeps shifting as the technology advances. (As a cautionary side note, a 2019 survey by venture capital firm MMC found that 40% of European start-ups classified as AI companies did not in fact utilise AI.)

“Natural language processing, or ‘NLP’, is a particular branch of AI that sits at the intersection of computer science and linguistics. It aims to interpret the language of everyday life, either written or spoken, which is known as ‘natural language’ in technology-speak. This is no small task: whilst computers are well suited to processing structured data, human expression is notoriously unstructured and nuanced, often relying on context for its interpretation. But bringing order to this apparent chaos is precisely what NLP sets out to do, using algorithms to process natural language inputs, extract meaning from them and perform tasks based on them.

“Given the ubiquitous nature of human language, the use cases for NLP are unsurprisingly broad, including entity extraction, translation, topic classification, text generation, conversational interfaces, and sentiment analysis (it’s entity extraction that we mainly focus on at RulesLab). Many of us encounter NLP every day, through virtual assistants like Alexa or Siri, Gmail’s inbuilt ‘Promotions’ filter, predictive text when composing an SMS or email, and the incessant chatbots that pop up when you’re doing some online shopping. NLP has been adopted in a range of industries, including retail, marketing, finance and healthcare.

“NLP also overlaps with another subset of AI, machine learning (ML), which uses algorithms to ‘teach’ machines from past experience rather than relying on explicit programming. This ability to learn is what allows AI chatbots, for example, to improve their responses over time.”
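As a toy illustration of learning from past examples rather than explicit programming, the sketch below is a from-scratch multinomial naive Bayes classifier in plain Python that learns to tell promotional messages from personal ones. The training messages, the labels and the `NaiveBayes` class are invented for illustration; this is not how Gmail's filter or any particular chatbot actually works.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    # Lowercase, split on whitespace and strip simple punctuation from each word.
    words = (w.strip(".,!?;:()").lower() for w in text.split())
    return [w for w in words if w]


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.label_counts = Counter(labels)      # label -> number of examples
        self.vocab = set()
        for text, label in zip(texts, labels):
            words = tokenize(text)
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def predict(self, text):
        total_examples = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            # log P(label) + sum over words of log P(word | label)
            score = math.log(count / total_examples)
            total_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                word_count = self.word_counts[label][word]
                score += math.log((word_count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented training examples: the model learns from these, not from hand-written rules.
train_texts = [
    "Huge sale, 50% off everything this weekend",
    "Exclusive discount code for our newsletter subscribers",
    "Limited time offer: free shipping on all orders",
    "Can we move tomorrow's meeting to 3pm?",
    "Here are the notes from today's project meeting",
    "Are you free for lunch on Friday?",
]
train_labels = ["promotion"] * 3 + ["personal"] * 3

clf = NaiveBayes().fit(train_texts, train_labels)
print(clf.predict("Weekend sale: discount on all shoes"))  # promotion
print(clf.predict("Can we meet for lunch tomorrow?"))       # personal
```

Adding more labelled examples changes the model's behaviour without anyone editing its logic, which is the essence of the ML approach described above.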

So how does the NLP process actually work?

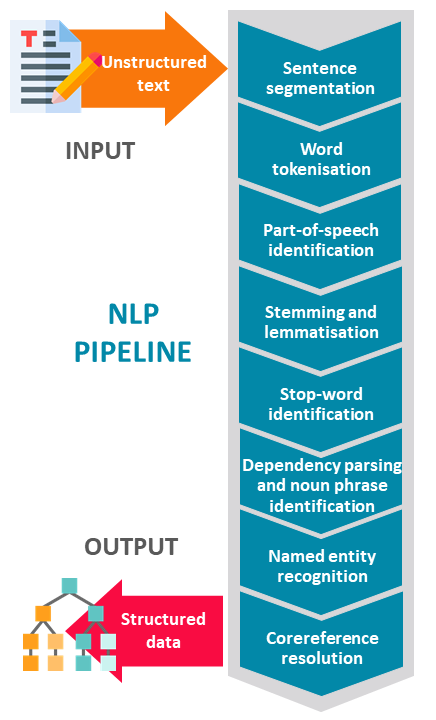

“There are a number of different steps that go into creating an NLP pipeline, which allows a computer to ‘understand’ unstructured language data by breaking it down into its building blocks – what is often called ‘syntactic analysis’.

“The basic elements of syntactic analysis are:

1. Sentence segmentation: Breaking a block of text down into individual sentences.

2. Word tokenisation: Further breaking down these sentences into individual words.

3. Part-of-speech tagging: Categorising words according to grammatical parts of speech e.g. nouns, adjectives, verbs etc.

4. Stemming and lemmatisation: Reducing words to their root form, in order to standardise them.

5. Stop-word identification: Identifying and filtering out very common words that carry little meaning on their own, e.g. articles and prepositions.

6. Dependency parsing and noun phrase identification: Analysing how the words in a sentence relate to one another, and using this information to group together noun phrases that are discussing the same concepts.

7. Named entity recognition: Identifying and labelling words according to pre-defined categories (‘named entities’), e.g. person names, geographical locations, organisation names.

8. Coreference resolution: Mapping pronouns back to the original entities they refer to.
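For a feel of what steps 1, 2, 4 and 5 involve, here is a deliberately naive, rule-based sketch in plain Python. The regular expressions, the stop-word list and the function names are assumptions made for illustration. It performs crude suffix-stripping (stemming) rather than true lemmatisation, and the remaining steps (part-of-speech tagging, dependency parsing, named entity recognition and coreference resolution) generally need trained statistical models rather than simple rules like these.

```python
import re

# A tiny, hand-picked stop-word list (real libraries ship much larger ones).
STOP_WORDS = {"a", "an", "the", "of", "in", "on", "at", "to", "and",
              "was", "is", "with", "she", "he", "it"}


def segment_sentences(text):
    # Step 1: split after '.', '!' or '?' when followed by whitespace.
    # Naive: abbreviations such as "Dr." would cause false splits.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(sentence):
    # Step 2: words (allowing one internal apostrophe) and punctuation marks
    # become separate tokens.
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", sentence)


def crude_stem(word):
    # Step 4 (stemming only, no lemmatisation): strip a few common suffixes,
    # keeping at least three characters of the stem.
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def remove_stop_words(tokens):
    # Step 5: drop stop words and punctuation-only tokens.
    return [t for t in tokens if t.lower() not in STOP_WORDS and re.search(r"\w", t)]


def process(text):
    # Run the steps in order for each sentence.
    return [
        [crude_stem(t.lower()) for t in remove_stop_words(tokenize(sentence))]
        for sentence in segment_sentences(text)
    ]


text = "The patient fell at home. She was complaining of pain in the left leg."
print(segment_sentences(text))
# ['The patient fell at home.', 'She was complaining of pain in the left leg.']
print(process(text))
# [['patient', 'fell', 'home'], ['complain', 'pain', 'left', 'leg']]
```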

If you’re interested in more detail on the NLP pipeline steps and some illustrative examples, check out this easy-to-follow blog post.

“Depending on the desired outcome, these steps can be re-ordered or discarded as necessary. Building them from scratch requires a huge amount of mathematical, technical and linguistic expertise, but there are a number of really great Python-based NLP libraries out there that make this functionality readily accessible. At RulesLab, for example, we use the spaCy library in our NLP offering.
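The idea that steps can be re-ordered or dropped can be sketched as a pipeline defined by an ordered list of components. This is plain Python with hypothetical component names and a dict standing in for a processed document; it is not spaCy's API, although spaCy also organises its processing as a configurable pipeline of components.

```python
import re


# Hypothetical components: each takes a "doc" dict and returns it, updated.
def tokenize(doc):
    doc["tokens"] = re.findall(r"\w+|[^\w\s]", doc["text"])
    return doc


def lowercase(doc):
    doc["tokens"] = [t.lower() for t in doc["tokens"]]
    return doc


def drop_stop_words(doc, stop_words=frozenset({"a", "an", "the", "of", "in"})):
    doc["tokens"] = [t for t in doc["tokens"] if t.lower() not in stop_words]
    return doc


def drop_punctuation(doc):
    doc["tokens"] = [t for t in doc["tokens"] if re.search(r"\w", t)]
    return doc


def run_pipeline(text, components):
    # Apply each component in order; the list itself defines the pipeline.
    doc = {"text": text}
    for component in components:
        doc = component(doc)
    return doc


text = "Fracture of the femur."
full = run_pipeline(text, [tokenize, lowercase, drop_stop_words, drop_punctuation])
minimal = run_pipeline(text, [tokenize])  # later steps discarded
print(full["tokens"])     # ['fracture', 'femur']
print(minimal["tokens"])  # ['Fracture', 'of', 'the', 'femur', '.']
```

Because the pipeline is just a list, a step can be discarded by leaving it out or moved by reordering the list. In this sketch `tokenize` must still run first, since every other component reads `doc["tokens"]`.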

“NLP can also go beyond the above process by using semantic analysis. Semantic analysis looks at a piece of text more broadly, in order to understand what it means rather than just how it is structured. Sentiment analysis is a good example of this, using NLP to predict whether a series of product reviews is positive, negative or neutral, based on the meaning of the words being used and their relationships with each other throughout the text. Some people prefer to distinguish semantic analysis from NLP, arguing that it instead belongs to ‘NLU’ (natural language understanding), being more concerned with interpretation, in contrast to the processing focus of syntactic analysis.”
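A very simple way to approximate sentiment analysis is a lexicon-based scorer, sketched below. The word scores and negator list are invented for illustration, and production systems more commonly rely on trained models. The one-word negation check is only a crude nod to the point that meaning depends on how words relate to one another.

```python
import re

# Hypothetical mini-lexicon: word -> polarity score.
LEXICON = {"great": 2, "good": 1, "love": 2, "excellent": 2,
           "bad": -1, "poor": -1, "terrible": -2, "broken": -2}
NEGATORS = {"not", "never", "no"}


def sentiment(review):
    tokens = re.findall(r"[a-z']+", review.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = LEXICON.get(tok, 0)
        # Flip the polarity if the previous word is a negator ("not good").
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("Great product, I love it"))                     # positive
print(sentiment("Arrived broken and the support was terrible"))  # negative
print(sentiment("The battery is not good"))                      # negative
print(sentiment("It arrived on Tuesday"))                        # neutral
```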

How will NLP be utilised in the RulesLab product suite?

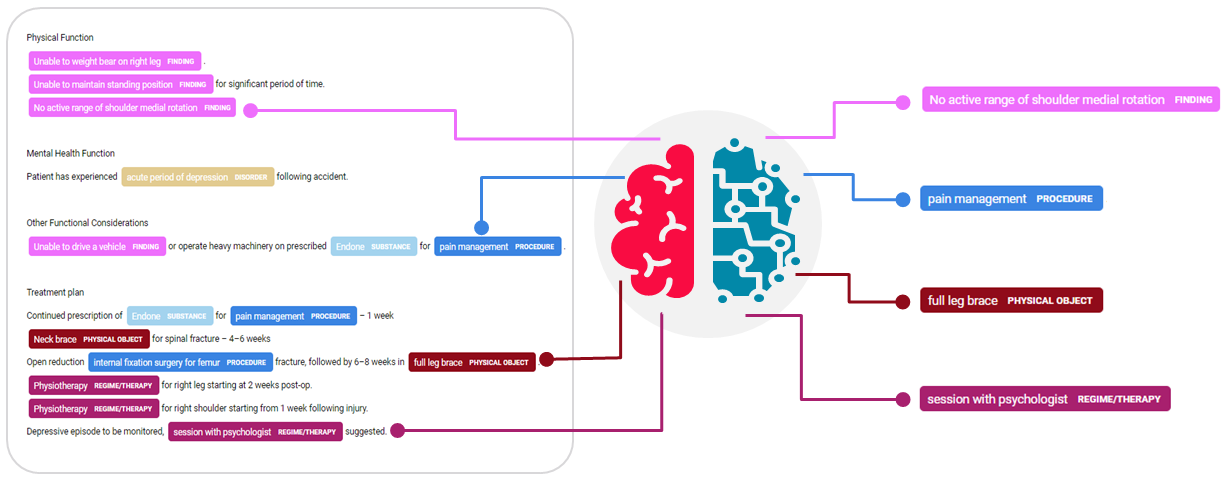

“RulesLab will be harnessing NLP predominantly for entity extraction: identifying and extracting standard terms or codes from natural language information, based on pre-defined language patterns. One example is taking doctors’ notes (which are notoriously unstructured) and returning the corresponding SNOMED codes.

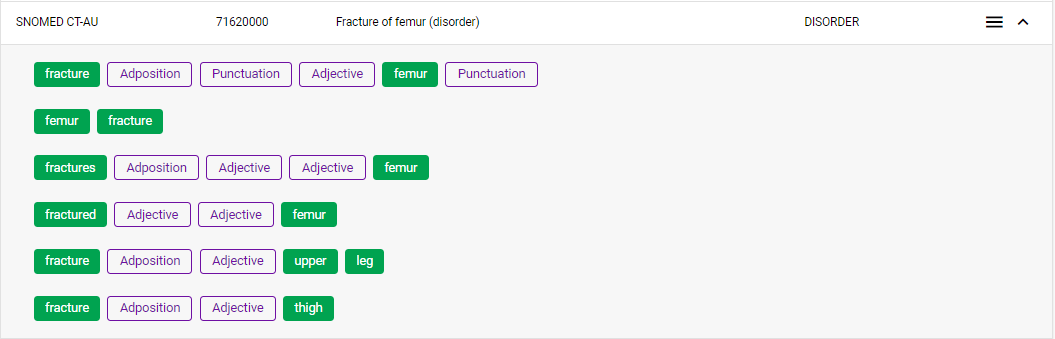

“In the example above, phrases such as ‘femur fracture’, ‘fracture – upper leg’, ‘fracture of femur’ and ‘fracture (femur)’ would all point to the single, standard SNOMED code 71620000, based on the defined patterns of mandatory words and optional grammatical components.
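The pattern idea can be sketched in plain Python. The `EntityRule` structure, the treatment of ‘upper leg’ as an alternative to ‘femur’ and the set of optional words are assumptions for illustration, not RulesLab's actual rule format; only the code 71620000 and the four phrase variants come from the example. In this sketch a phrase matches when every mandatory group is found and any leftover words are declared optional.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class EntityRule:
    code: str
    label: str
    mandatory: tuple    # each group is a tuple of alternative word sequences
    optional: frozenset  # words allowed around the mandatory ones


# Hypothetical rule for the femur fracture example.
FEMUR_FRACTURE = EntityRule(
    code="71620000",
    label="Fracture of femur",
    mandatory=((("fracture",),), (("femur",), ("upper", "leg"))),
    optional=frozenset({"of", "the", "left", "right"}),
)


def normalise(phrase):
    # Lowercase; punctuation such as dashes and brackets becomes whitespace.
    return re.sub(r"[^a-z0-9]+", " ", phrase.lower()).split()


def find_sequence(tokens, seq, used):
    # Positions of the first occurrence of seq that avoids already-used tokens.
    n = len(seq)
    for start in range(len(tokens) - n + 1):
        span = range(start, start + n)
        if tuple(tokens[start:start + n]) == seq and not used.intersection(span):
            return set(span)
    return None


def match(phrase, rule):
    tokens = normalise(phrase)
    used = set()
    for alternatives in rule.mandatory:
        for seq in alternatives:
            positions = find_sequence(tokens, seq, used)
            if positions is not None:
                used |= positions
                break
        else:
            return None  # a mandatory group was not found
    leftovers = [t for i, t in enumerate(tokens) if i not in used]
    return rule.code if all(t in rule.optional for t in leftovers) else None


for phrase in ["femur fracture", "fracture – upper leg",
               "fracture of femur", "fracture (femur)"]:
    print(phrase, "->", match(phrase, FEMUR_FRACTURE))  # each -> 71620000
```

Any extra word that is not declared optional, as in ‘suspected femur fracture’, causes the phrase to be rejected in this sketch, so a real rule set would have to decide deliberately how such qualifiers are handled.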

“Of course, healthcare is only one of the many industries that can benefit from this solution: users will be able to define their own entities, tailored to their particular area of work.

“Users may only wish to extract the entities of interest, and then run further analysis in their own systems or processes. However, the RulesLab NLP component can also be used in conjunction with the wider RulesLab system, as users may wish to pass the NLP outputs on to the RulesLab Engine for processing against rule sets. After all, it is much easier to design effective, accurate rules for the structured data produced by NLP than for raw natural language information.”
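To illustrate why structured output simplifies rule design, here is a hypothetical sketch. The `Entity` record, the `flag_for_review` rule and the sample data are invented for illustration and do not reflect the RulesLab Engine's actual rule format. Once different phrasings have been normalised to one code, the rule only needs to compare codes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    code: str  # standard code, e.g. a SNOMED CT concept ID
    text: str  # the span of the original note the code was extracted from


FEMUR_FRACTURE = "71620000"


def flag_for_review(entities):
    # Hypothetical rule: one comparison on a code, instead of anticipating
    # every way the same finding could be phrased in free text.
    return any(e.code == FEMUR_FRACTURE for e in entities)


# Hypothetical NLP output for three sets of notes.
notes_a = [Entity(code="71620000", text="fracture (femur)")]
notes_b = [Entity(code="71620000", text="fracture - upper leg")]
notes_c = []  # nothing of interest extracted

print(flag_for_review(notes_a), flag_for_review(notes_b), flag_for_review(notes_c))
# True True False
```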

You’ve mentioned healthcare as one of the industries that has adopted NLP-based solutions. Can you tell us a bit about the current work in that space? And do you think NLP is being well utilised there, or is there further untapped potential?

“There is a massive amount of untapped potential for NLP, and ML more generally, in the healthcare industry. Currently, most doctors in Australian hospitals still rely on pen and paper for their clinical notes, as computerised solutions are often not efficient enough to justify the switch. Teams of people then manually interpret these notes and codify them for processing by computer systems. At the moment, NLP is used to help interpret the notes and choose the correct codes (assisted coding) to improve accuracy, though more could be done. NLP could assist further in this workflow by using optical character recognition (OCR) to digitise the handwritten notes, then passing the text through syntactic analysis and a named entity recognition (NER) model to obtain the coded information needed for machine processing. Speech-to-text techniques could also be used to avoid the hassle of deciphering doctors’ handwriting altogether. There is also scope for NLP to play a role in clinical support systems. We anticipate some huge changes in this space in the coming months and years, and are excited to be a part of that journey.”

Follow the link for some insights into how NLP will play a role as voice technology is increasingly adopted in healthcare.